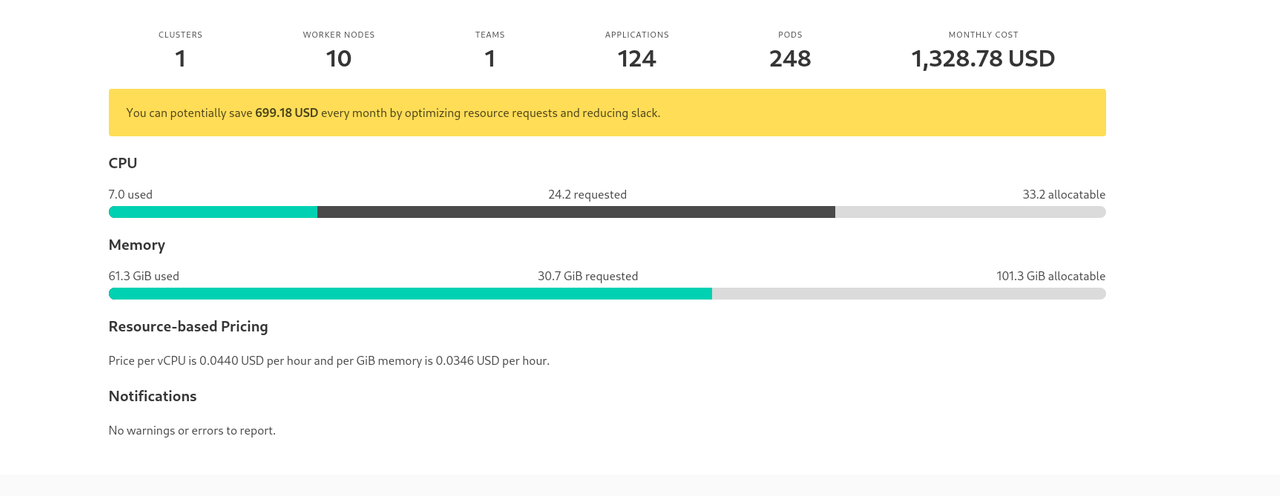

The first step to lowering your Kubernetes cluster costs is to analyze your resource usage against your nodes' capacity.

This can be done locally or in-cluster via Helm with kube-resource-report. The tool generates an HTML overview that will help you adjust your limits and requests.
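To make that comparison concrete, here is a minimal Python sketch of the kind of numbers such a report surfaces. For each node it computes the share of allocatable CPU and memory that pods request, and the slack (requested minus actually used). This is not kube-resource-report's code. The `Node`/`Pod` classes and all figures are hypothetical; in practice you would take them from `kubectl describe node` and a metrics source such as metrics-server.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_cpu_m: int   # allocatable CPU in millicores
    allocatable_mem_mi: int  # allocatable memory in MiB


@dataclass
class Pod:
    node: str            # name of the node the pod is scheduled on
    request_cpu_m: int   # CPU request (millicores)
    request_mem_mi: int  # memory request (MiB)
    used_cpu_m: int      # observed CPU usage (millicores)
    used_mem_mi: int     # observed memory usage (MiB)


def node_summary(nodes, pods):
    """Per node: % of allocatable that is requested, plus slack
    (requested but unused resources, never negative per pod)."""
    summary = {}
    for node in nodes:
        on_node = [p for p in pods if p.node == node.name]
        req_cpu = sum(p.request_cpu_m for p in on_node)
        req_mem = sum(p.request_mem_mi for p in on_node)
        summary[node.name] = {
            "cpu_requested_pct": round(100 * req_cpu / node.allocatable_cpu_m, 1),
            "mem_requested_pct": round(100 * req_mem / node.allocatable_mem_mi, 1),
            "cpu_slack_m": sum(max(p.request_cpu_m - p.used_cpu_m, 0) for p in on_node),
            "mem_slack_mi": sum(max(p.request_mem_mi - p.used_mem_mi, 0) for p in on_node),
        }
    return summary


if __name__ == "__main__":
    # Hypothetical node and pods
    nodes = [Node("node-a", 2000, 8000)]
    pods = [
        Pod("node-a", 500, 1024, 120, 400),
        Pod("node-a", 250, 512, 240, 500),
    ]
    print(node_summary(nodes, pods))
```

A node with a high requested percentage but large slack is a sign that requests, not real load, are driving your node count.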

1. Install kube-resource-report

Cluster Install with Helm

git clone https://github.com/hjacobs/kube-resource-report

cd kube-resource-report

kubectl create namespace reporting

helm install kube-resource-report ./unsupported/chart/kube-resource-report -n reporting

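helm status kube-resource-report -n reporting

Get the report by creating an ingress for the service, or by using kubectl port-forward to forward it to your local machine (use your own pod name, as listed by kubectl get pods -n reporting):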

kubectl port-forward kube-resource-pod-xe13e24 8080:8080 -n reporting

Local Install

If you would like to run it locally, these commands (run from the cloned repository) generate the same HTML report as the cluster method:

# Install Poetry with your package manager first

poetry install && poetry shell

mkdir output

python3 -m kube_resource_report output/ # uses clusters defined in ~/.kube/config

Example of the report:

2. Adjust your machine types by creating properly sized node pool(s) based on slack (resources that are requested but not used) and performance needs, and ideally tune the Kubernetes requests and limits to match both. Defaults (for example, a namespace LimitRange) help, and you can add per-container settings for your high-priority or heavy-load deployments.
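One hedged way to close the gap between requests and real usage is to derive per-container values from observed usage samples. The sketch below uses a hypothetical rule of thumb (request = 95th percentile of usage plus 10% headroom, limit = 150% of the peak); neither the rule nor the function names come from kube-resource-report or Kubernetes, so tune the percentages to your workloads. Units are whatever your samples use (MiB, millicores).

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division (avoids float rounding surprises)."""
    return -(-a // b)


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of integers."""
    ordered = sorted(samples)
    rank = ceil_div(pct * len(ordered), 100)
    return ordered[max(rank, 1) - 1]


def suggest_resources(samples, request_pct=95, headroom_pct=10, limit_pct=150):
    """Hypothetical rule of thumb:
    request = p95 of observed usage + 10% headroom,
    limit   = 150% of the peak observed usage (never below the request)."""
    if not samples:
        raise ValueError("need at least one usage sample")
    request = ceil_div(percentile(samples, request_pct) * (100 + headroom_pct), 100)
    limit = ceil_div(max(samples) * limit_pct, 100)
    return {"request": request, "limit": max(limit, request)}


if __name__ == "__main__":
    # Hypothetical memory usage samples for one container, in MiB
    memory_mi = list(range(200, 400, 10))  # 200, 210, ..., 390
    print(suggest_resources(memory_mi))  # {'request': 418, 'limit': 585}
```

Integer arithmetic is used throughout so that values like 380 × 1.1 don't round up by one because of floating-point error.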

The official Kubernetes documentation explains requests and limits well:

> If the node where a Pod is running has enough of a resource available, it's possible (and allowed) for a container to use more resource than its `request` for that resource specifies. However, a container is not allowed to use more than its resource `limit`.
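The two rules in that quote can be modelled in a few lines. This is a simplified, hypothetical helper: `node_spare` stands for capacity on the node, beyond this container's request, that nothing else is using. It ignores that enforcement differs per resource (CPU use above the limit is throttled, while memory use above the limit gets the container OOM-killed).

```python
def allowed_usage(demand: int, request: int, limit: int, node_spare: int) -> int:
    """How much of `demand` a container can actually use, per the quoted rules.

    demand:     what the container would like to use
    request:    its resource request
    limit:      its resource limit (Kubernetes requires limit >= request)
    node_spare: hypothetical simplification, i.e. capacity on the node beyond
                this container's request that no one else is using
    """
    if limit < request:
        raise ValueError("limit must be >= request")
    # Bursting above the request is possible only into spare node capacity,
    # and never beyond the limit.
    ceiling = min(limit, request + max(node_spare, 0))
    return min(demand, ceiling)


if __name__ == "__main__":
    # CPU in millicores: request 250m, limit 500m
    print(allowed_usage(300, 250, 500, node_spare=1000))  # 300: burst above request
    print(allowed_usage(800, 250, 500, node_spare=1000))  # 500: capped by the limit
    print(allowed_usage(400, 250, 500, node_spare=100))   # 350: capped by spare capacity
```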

Dev Environment Machine Types:

- E2 (Google Cloud)

- For your other cloud providers, look up the machine types comparable to E2.

- Don't overallocate disk space.
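When comparing machine types for a node pool, a rough first estimate is how many nodes of each shape you need to fit your total requests, and what that costs per month. The sketch below uses hypothetical shape names and placeholder prices (not real E2 pricing). It also ignores per-node overhead such as DaemonSets and bin-packing fragmentation, so treat its node counts as a lower bound.

```python
from dataclasses import dataclass


@dataclass
class MachineType:
    name: str              # hypothetical shape name
    cpu_m: int             # allocatable CPU per node (millicores)
    mem_mi: int            # allocatable memory per node (MiB)
    monthly_price: float   # placeholder price; look up your provider's pricing


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def nodes_needed(mt: MachineType, cpu_m: int, mem_mi: int) -> int:
    """Whichever resource runs out first decides the node count (at least 1)."""
    return max(ceil_div(cpu_m, mt.cpu_m), ceil_div(mem_mi, mt.mem_mi), 1)


def compare(machine_types, cpu_m: int, mem_mi: int):
    """(name, node count, monthly cost) per machine type, cheapest first."""
    rows = []
    for mt in machine_types:
        count = nodes_needed(mt, cpu_m, mem_mi)
        rows.append((mt.name, count, count * mt.monthly_price))
    return sorted(rows, key=lambda row: row[2])


if __name__ == "__main__":
    shapes = [
        MachineType("std-2cpu", 1900, 6000, 50.0),
        MachineType("std-4cpu", 3900, 13000, 95.0),
        MachineType("highcpu-4cpu", 3900, 3000, 75.0),
    ]
    # Hypothetical total requests of the dev workloads: 7 vCPU, ~20 GiB
    for row in compare(shapes, cpu_m=7000, mem_mi=20000):
        print(row)
```

With these made-up numbers the memory-poor `highcpu-4cpu` shape is by far the most expensive, because memory rather than CPU decides its node count.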

Production cluster costs can also be reduced, but take note of historical usage during your peak times. The same applies to dev environments, but in production a sizing mistake costs real money, so be careful when adjusting anything.
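Before shrinking a production node pool, one way to respect historical peaks is to check a proposed node count against past hourly usage while keeping some headroom. This sketch assumes you have exported hourly cluster CPU usage in millicores from your monitoring system; the 80% utilization target, the function names, and the sample figures are all hypothetical. The same check applies to memory.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def min_nodes_for_peak(hourly_usage_m, node_cpu_m, max_util_pct=80):
    """Smallest node count whose capacity, at max_util_pct, covers the peak hour."""
    peak = max(hourly_usage_m)
    return ceil_div(peak * 100, node_cpu_m * max_util_pct)


def hours_over_budget(hourly_usage_m, node_cpu_m, node_count, max_util_pct=80):
    """Indexes of the hours whose usage would exceed max_util_pct of the
    capacity of node_count nodes."""
    budget = node_cpu_m * node_count * max_util_pct // 100
    return [hour for hour, used in enumerate(hourly_usage_m) if used > budget]


if __name__ == "__main__":
    # Hypothetical hourly cluster CPU usage (millicores), including a daily peak
    usage = [3000, 3200, 5200, 7600, 6900, 4100]
    print(min_nodes_for_peak(usage, node_cpu_m=3900))                # 3
    print(hours_over_budget(usage, node_cpu_m=3900, node_count=2))   # [3, 4]
```

Averages alone would suggest two nodes are plenty here; the per-hour check shows that two nodes would run over the target during the peak hours.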